In response to the question “It’s cool, but how is this what the computer sees?”

Hello-

There’s a short version, a long version, and a-bunch-of-research-papers version of the explanation. The short version is that I give a picture to an image-recognition algorithm, and tell it “whatever you recognize, enhance.”

The longer version is that it’s based on deep neural networks, which are a relatively new innovation in machine learning. Neural networks, and deep neural networks (DNNs) in particular, are a really important innovation because they let computers learn to do things “naturally”, instead of us having to describe every aspect of the task as with conventional programming. You and I have no problem recognizing a dog in an image, telling a dog and a cat apart, or telling a jungle cat from a house cat. But try describing what an image of a dog looks like, precisely enough for a computer to follow. It’s practically impossible: nobody could hand-write a reliable dog detector in code. So what if we gave a computer a few thousand images of dogs and a few thousand of cats, and told it to figure out the difference on its own? That’s what a neural network lets us do.

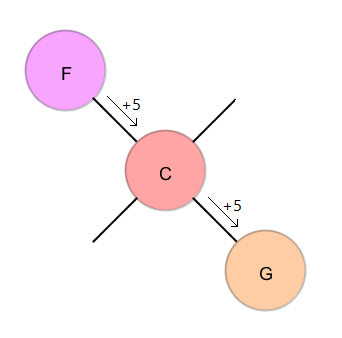

A neural network is a computation system, or algorithm, made of a bunch of “neurons” linked together in layers. Each connection between two neurons has a weight, which sets how strongly one neuron affects a neuron in the next layer.
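To make “neurons linked together in layers” a bit more concrete, here’s a minimal sketch in plain Python of a tiny network passing an input forward. The weights are made up by hand purely for illustration (a real image network has millions of learned ones), and the ReLU activation function is an assumption on my part; networks use various activation functions.

```python
def relu(x):
    """Activation function: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def layer_forward(inputs, weights, biases):
    """One layer: each neuron sums its weighted inputs, adds a bias, applies ReLU.

    weights[j][i] is the weight on the connection from input i to neuron j.
    """
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the input through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 3-input -> 2-neuron -> 1-neuron network with made-up weights.
toy_layers = [
    ([[0.5, -1.0, 0.25], [1.0, 1.0, -0.5]], [0.0, 0.1]),
    ([[2.0, -1.0]], [0.0]),
]

print(network_forward([1.0, 0.5, 2.0], toy_layers))
```

Changing any single weight changes how much one neuron influences the next, which is exactly the knob that training turns.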

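These weights are “tuned” so that the network gives a desired output for a given input. (The tuning works like this: the network is given a sample set of inputs, and its outputs are scored on how close they are to the desired ones. Each weight is then nudged slightly in whichever direction improves the score, and the process repeats many times. The direction is worked out with calculus, using a method called backpropagation, rather than by random trial and error. You can loosely think of it as evolution: nobody designs the final weights; they emerge gradually.)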
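In practice, the adjustment isn’t random: networks are tuned with gradient descent, which computes which way to nudge each weight so the score gets better. Here’s a minimal sketch of that loop for the simplest possible case, a single neuron with one weight `w` and one bias `b`, fit to made-up data. The data, learning rate, and step count are all hypothetical; a real DNN does the same thing for millions of weights at once, using backpropagation to get the gradients.

```python
def train_single_neuron(examples, learning_rate=0.05, steps=2000):
    """Fit prediction = w * x + b to (x, target) pairs by gradient descent.

    The score is mean squared error (lower is better); every step nudges
    w and b in the direction that reduces it.
    """
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - t) * x for x, t in examples) / n
        grad_b = sum(2 * (w * x + b - t) for x, t in examples) / n
        # Step "downhill", against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up training data that follows target = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_single_neuron(data)
print(f"learned w={w:.3f}, b={b:.3f}")  # prints: learned w=2.000, b=1.000
```

Nobody tells the neuron “the answer is 2x + 1”; it ends up there just by repeatedly following the score.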

A deep neural network is like a regular neural network, but much larger, with many more layers (the one I use has 30 layers). This depth means it can learn higher-level patterns, like eyes and other object parts in images. The DNN I used was trained (tuned) on a set of 2.5 million images, by being shown the images and taught to guess what was in them.
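As a tiny illustration of why stacking layers helps, here’s a hand-wired two-layer sketch that computes XOR (“exactly one input is on”), something a single threshold neuron cannot do. The weights are chosen by hand, not learned, and the function names are hypothetical. The first layer detects two simple features, and the second layer combines them into a higher-level pattern; this is only loosely analogous to a real DNN building eyes out of simpler edges and textures.

```python
def step(x):
    """Threshold activation: the neuron 'fires' (1) if its input is positive."""
    return 1 if x > 0 else 0


def xor_network(a, b):
    # Layer 1: two simple feature detectors built from the raw inputs.
    at_least_one = step(a + b - 0.5)  # fires if a OR b is on
    both = step(a + b - 1.5)          # fires if a AND b are on
    # Layer 2: a higher-level pattern built from the layer-1 features.
    return step(at_least_one - both - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```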

Deep dreaming is a way to learn about how a DNN works. Because a DNN’s weights are tuned automatically rather than designed by hand, it acts as a black box: an algorithm whose inner workings are unknown. Sure, we can look at every weight, but it’s not like there’s some single neuron that represents dog-ness, for example. DeepDream was created as a way to see what happens inside.

DeepDream works by feeding an image into the DNN and then adjusting the image to optimize (maximize*) the activity of a particular layer of neurons. This increases the patterns in the image that that particular layer recognizes. By varying which layer we optimize, we can vary the types of features that get enhanced. For example, optimizing low-to-medium layers (the low ones being the first layers the image passes through) produces images that look like impressionist art, with simple patterns of lines. The video was made with a layer about two-thirds of the way through the network. At this depth, most of what is recognized is at the scale of people, buildings, faces, cars, and other similar, interesting objects. In the layers above these, the patterns become too fine, and the images mostly look dirty.

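*Yes, roughly: it uses a technique called gradient ascent to increase the overall activation of the chosen layer. The math behind it (calculus on very large vectors, computed with backpropagation) gets heavy, and I don’t fully follow the details myself, so I’ll leave it out.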
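For anyone who wants it anyway, the core update can be written compactly. This is a sketch based on my understanding of the “L2” objective in Google’s publicly released DeepDream example code; other variants exist. Let $a_\ell(x)$ be the activations of the chosen layer $\ell$ when the network sees image $x$, and let $\eta$ be a small step size:

$$
L(x) = \tfrac{1}{2}\,\lVert a_\ell(x) \rVert^2,
\qquad
g = \nabla_x L(x),
\qquad
x \leftarrow x + \frac{\eta}{\operatorname{mean}\lvert g \rvert}\, g
$$

The gradient $g$ is computed with backpropagation, the same machinery used in training, except that the weights stay fixed and the image is what changes. Dividing by the mean absolute gradient keeps each step a similar size regardless of which layer is chosen.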

To sum it up, DeepDream is enhancing the parts and patterns of images that a layer of a DNN image recognition algorithm detects, showing what a computer recognizes in the image.
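To tie the pieces together, here’s a minimal, self-contained sketch of the DeepDream loop on a toy 1-D “image” in plain Python. Everything here is an assumption for illustration: the “layer” is a single hand-picked pattern detector (`kernel`) instead of a trained 30-layer network, and the helpers `detect`, `objective`, and `dream_step` are hypothetical names. The idea is the same, though: measure how strongly the layer responds, work out which way to nudge each pixel to make it respond more, and nudge.

```python
def detect(image, kernel):
    """Toy 'layer': slide a pattern detector (kernel) along a 1-D image and
    apply ReLU, so only positive matches produce activation."""
    k = len(kernel)
    return [
        max(0.0, sum(kernel[m] * image[j + m] for m in range(k)))
        for j in range(len(image) - k + 1)
    ]


def objective(image, kernel):
    """How strongly the layer responds: L = 0.5 * sum(activation ** 2)."""
    return 0.5 * sum(a * a for a in detect(image, kernel))


def dream_step(image, kernel, step_size=0.1):
    """One gradient-ascent step that changes the image to raise objective()."""
    acts = detect(image, kernel)
    k = len(kernel)
    # Backpropagate: dL/d(image[i]) sums act[j] * kernel[i - j] over every
    # window j covering pixel i. ReLU's gradient is already accounted for,
    # because act[j] is 0 wherever ReLU clipped the response.
    grad = [0.0] * len(image)
    for j, a in enumerate(acts):
        for m in range(k):
            grad[j + m] += a * kernel[m]
    mean_abs = sum(abs(g) for g in grad) / len(grad)
    if mean_abs == 0:
        return list(image)  # nothing recognized, so nothing to enhance
    # Normalize by the mean absolute gradient so every step has a similar size.
    return [x + step_size / mean_abs * g for x, g in zip(image, grad)]


kernel = [-1.0, 2.0, -1.0]  # hypothetical "bright spike" detector
image = [0.1, 0.0, 0.2, 0.0, 0.1, 0.3, 0.0, 0.1]  # made-up 1-D "image"

before = objective(image, kernel)
for _ in range(20):
    image = dream_step(image, kernel)
after = objective(image, kernel)
print(f"layer response: {before:.3f} -> {after:.3f}")
```

Each step makes the spots that already resemble the detector’s pattern resemble it even more, which is the “whatever you recognize, enhance” feedback loop in miniature. Google’s example code also processes the image at several scales and shifts it slightly between steps, which this sketch leaves out.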

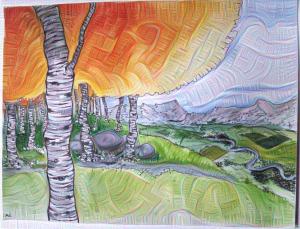

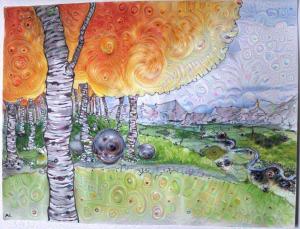

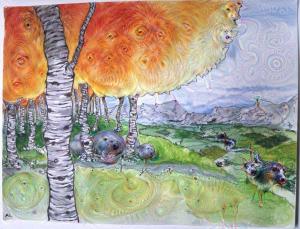

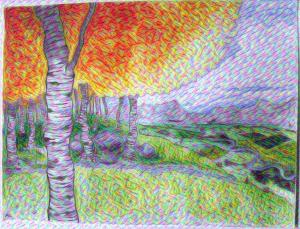

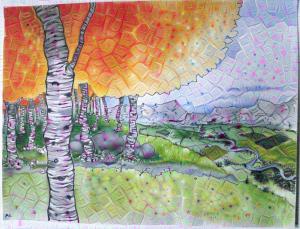

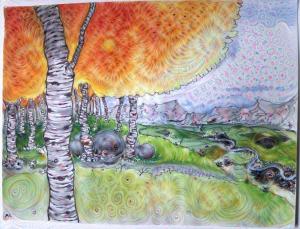

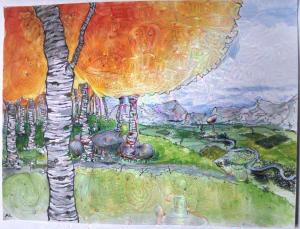

Here are samples from various layers of the DNN, showing how each layer ‘sees’ different things, in increasing detail.

Another fairly low-level layer. I’m not sure what the main focus of this layer is. Maybe something with lighting?

This is 1/4 to 1/3 of the way through, and the patterns are starting to become more varied. These are the ones that look like impressionist art.

A neat squares-and-dots effect shows up in this layer. It looks like some art style to me, but I’m not sure which.

Now we’re deep enough to start seeing more object-like patterns. Some eye-ish dots are forming along the river.

This is one of the last layers in the DNN. By this point, almost all of the detail is on a very small scale, leaving the image looking dirty.

Links to sources/more material:

The Google Research blog post that started it all: http://googleresearch.blogspot.com/2015/06/inceptionism-going-deeper-into-neural.html

A Google research paper (“Going Deeper with Convolutions”): http://arxiv.org/pdf/1409.4842v1.pdf